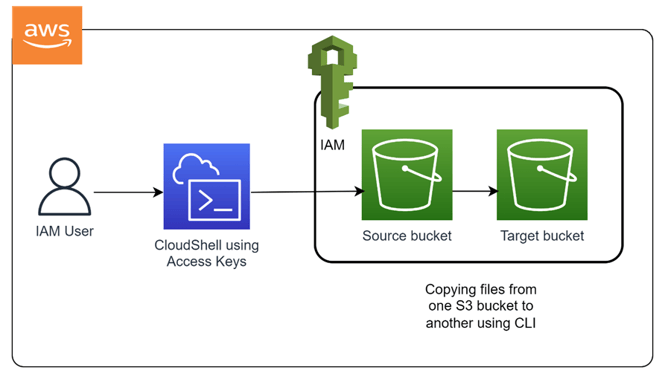

FILE UPLOAD AND TRANSFER BETWEEN AWS S3 BUCKETS [PROJECT - 2]

This project demonstrates how to upload files to an AWS S3 bucket and automatically transfer them to another S3 bucket using AWS services. The system is designed to be scalable, secure, and efficient, using AWS Lambda, S3 event notifications, and IAM roles to move files without manual intervention.

3/7/2025 · 3 min read

## Features

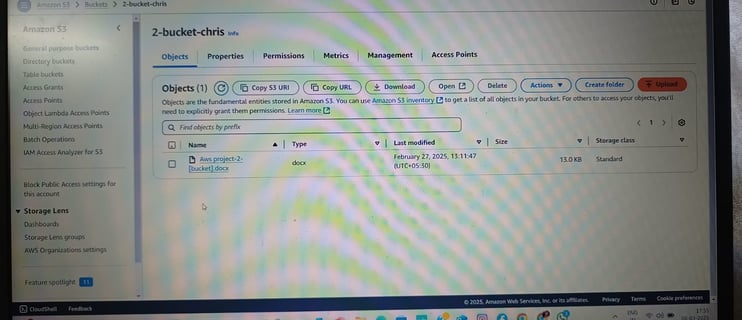

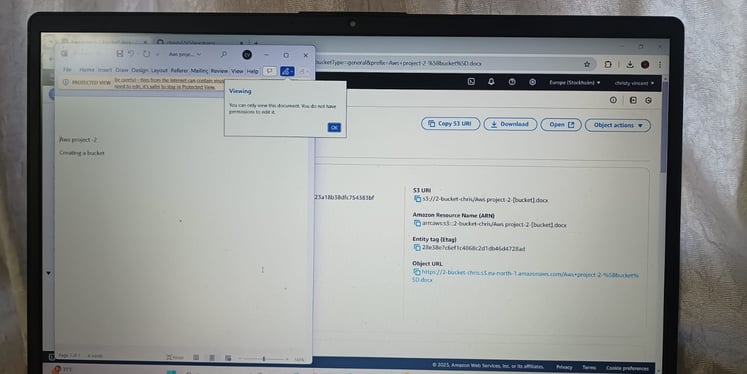

- File Upload: Users can upload files to a source S3 bucket via a web interface or API.

- Automatic Transfer: Files uploaded to the source bucket are automatically transferred to a destination S3 bucket.

- Event-Driven Architecture: S3 event notifications (e.g., s3:ObjectCreated:Put, emitted when an object is written with a PutObject request) trigger AWS Lambda functions that handle the file transfers.

- Security: IAM roles and policies ensure secure access to S3 buckets and Lambda functions.

- Scalability: The solution is built on serverless AWS services, so it scales with the number of uploads, and Lambda is billed per request and compute time rather than for idle servers.
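To make the Security point concrete, the Lambda function's execution role only needs to read objects from the source bucket and write objects to the destination bucket. The sketch below builds such a least-privilege policy document in Python. The helper name and the bucket names are hypothetical placeholders. The CloudWatch Logs permissions a real function also needs (commonly granted through the AWSLambdaBasicExecutionRole managed policy) are left out, and a real deployment may need extra actions, for example for object tags or KMS-encrypted objects.

```python
import json


def build_transfer_policy(source_bucket: str, dest_bucket: str) -> dict:
    """Return a least-privilege IAM policy for copying objects between two buckets.

    Hypothetical helper: bucket names are supplied by the caller.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Read access to objects in the source bucket only.
                "Sid": "ReadSourceObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{source_bucket}/*",
            },
            {
                # Write access to objects in the destination bucket only.
                "Sid": "WriteDestinationObjects",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": f"arn:aws:s3:::{dest_bucket}/*",
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder bucket names; print the JSON to paste into IAM or a template.
    policy = build_transfer_policy("my-source-bucket", "my-destination-bucket")
    print(json.dumps(policy, indent=2))
```

Scoping each statement to one bucket's objects means a compromised or buggy function cannot read from the destination or overwrite files in the source.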

## Technologies Used

- AWS Services: S3, Lambda, IAM, CloudWatch Logs

- Programming: Python (Boto3) for Lambda functions

- Infrastructure as Code: AWS CloudFormation

## Repository Structure

s3-file-transfer/

├── lambda/ # Contains Lambda function code for file transfer logic

├── scripts/ # Scripts for setup, configuration, and deployment

├── cloudformation/ # CloudFormation templates for infrastructure deployment (optional)

├── README.md # Detailed project documentation

└── LICENSE # License file

## How It Works

1. File Upload: A file is uploaded to the source S3 bucket via a web interface, API, or the AWS CLI.

2. Event Trigger: An S3 event (e.g., s3:ObjectCreated:Put) triggers an AWS Lambda function.

3. File Transfer: The Lambda function copies the file from the source bucket to the destination bucket.

4. Logging and Monitoring: CloudWatch logs capture transfer activity for monitoring and debugging.

5. Security: IAM roles ensure that only authorized entities can upload files and trigger the Lambda function.
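The transfer step above can be sketched as a small Python Lambda handler. This is a minimal illustration, not the project's actual code: the `DEST_BUCKET` environment variable, the function names, and the optional `s3_client` parameter (added so the logic can be tested without AWS) are all assumptions. It reads each record of the S3 event, decodes the object key (S3 URL-encodes keys in event payloads), and copies the object with Boto3's managed `copy`, which switches to a multipart copy for large objects.

```python
import os
from urllib.parse import unquote_plus


def iter_s3_objects(event: dict):
    """Yield (bucket, key) pairs from an S3 event notification payload."""
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys arrive URL-encoded in the event (a space becomes "+"), so decode them.
        key = unquote_plus(record["s3"]["object"]["key"])
        yield bucket, key


def lambda_handler(event, context, s3_client=None):
    """Copy every newly created object into the destination bucket.

    DEST_BUCKET is a hypothetical environment variable name. s3_client can be
    injected for testing; otherwise a Boto3 S3 client is created.
    """
    if s3_client is None:
        import boto3  # included in the AWS Lambda Python runtime

        s3_client = boto3.client("s3")

    dest_bucket = os.environ["DEST_BUCKET"]
    copied = []
    for source_bucket, key in iter_s3_objects(event):
        # Managed copy: uses multipart copy automatically for large objects.
        s3_client.copy(
            CopySource={"Bucket": source_bucket, "Key": key},
            Bucket=dest_bucket,
            Key=key,
        )
        # Anything printed here ends up in the function's CloudWatch Logs stream.
        print(f"Copied s3://{source_bucket}/{key} to s3://{dest_bucket}/{key}")
        copied.append(key)
    return {"copied": copied}
```

The destination must be a different bucket from the one that triggers the function; otherwise every copy would raise a new ObjectCreated event and invoke the function again in a loop.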

## Setup Instructions

1. Clone this repository:

```bash
git clone https://github.com/your-username/s3-file-transfer.git
```

2. Deploy the Lambda function and configure S3 event notifications using the provided scripts or CloudFormation templates.

3. Set up IAM roles and policies that give the Lambda function read access to the source bucket and write access to the destination bucket, and that allow S3 to invoke the function.

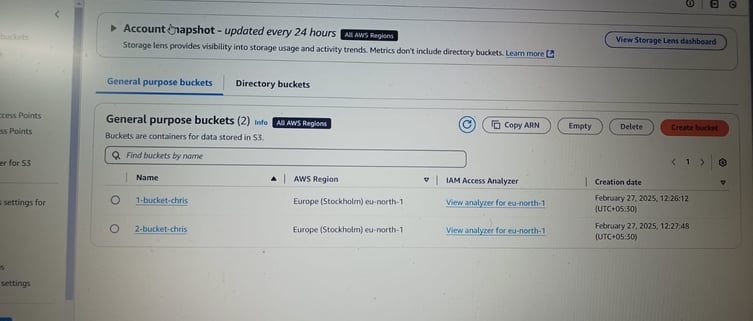

4. Configure the source and destination S3 buckets.

5. Test the solution by uploading a file to the source bucket and verifying its transfer to the destination bucket.
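As a rough sketch of steps 2 and 5 with Boto3, the code below wires the source bucket to the Lambda function and then checks that an uploaded file appears in the destination. The function names, the `AllowS3Invoke` statement ID, and the optional prefix filter are hypothetical; the repository's own scripts or CloudFormation templates may do this differently. It subscribes to `s3:ObjectCreated:*` rather than only `Put`, because large uploads made by the AWS CLI use multipart upload and raise `s3:ObjectCreated:CompleteMultipartUpload` instead.

```python
def build_notification_config(function_arn: str, prefix: str = "") -> dict:
    """Build a NotificationConfiguration that sends new-object events to Lambda."""
    config = {
        "LambdaFunctionArn": function_arn,
        "Events": ["s3:ObjectCreated:*"],
    }
    if prefix:
        # Optional: only react to keys that start with this prefix.
        config["Filter"] = {"Key": {"FilterRules": [{"Name": "prefix", "Value": prefix}]}}
    return {"LambdaFunctionConfigurations": [config]}


def configure_trigger(source_bucket, function_name, function_arn, account_id):
    """Allow S3 to invoke the function, then attach the notification (hypothetical helper)."""
    import boto3

    lambda_client = boto3.client("lambda")
    s3_client = boto3.client("s3")
    # The invoke permission must exist first: S3 validates the destination
    # when the notification configuration is saved.
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId="AllowS3Invoke",  # hypothetical statement ID
        Action="lambda:InvokeFunction",
        Principal="s3.amazonaws.com",
        SourceArn=f"arn:aws:s3:::{source_bucket}",
        SourceAccount=account_id,
    )
    # Note: this call replaces the bucket's entire existing notification configuration.
    s3_client.put_bucket_notification_configuration(
        Bucket=source_bucket,
        NotificationConfiguration=build_notification_config(function_arn),
    )


def upload_and_verify(local_path, source_bucket, dest_bucket, key):
    """Upload a test file and wait for the copy to appear (hypothetical helper)."""
    import boto3

    s3 = boto3.client("s3")
    s3.upload_file(local_path, source_bucket, key)
    # Polls HeadObject on the destination; raises if it gives up after the
    # waiter's default number of attempts.
    s3.get_waiter("object_exists").wait(Bucket=dest_bucket, Key=key)
```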

## Example Use Cases

- Data Replication: Replicate data across S3 buckets for backup or disaster recovery.

- Data Pipeline: Automate file transfers as part of a larger data processing pipeline.

- Multi-Region Storage: Transfer files between S3 buckets in different AWS regions.

## Contributions

Contributions are welcome! If you have suggestions, improvements, or bug fixes, feel free to:

- Open an issue to discuss the change.

- Submit a pull request with your proposed updates.
